If we think of the world as a book, then augmented reality is the digital magnifying glass that lets us explore the details behind every word, letter, and punctuation mark, right down to the granular texture of the page itself. AR layers context onto an interface that you can see and understand. It blends two realms, the physical world you see with your eyes and the digital world you see on your device, into a new layer that’s as familiar as the phone in your hand.

When married to image recognition and machine vision, AR opens a whole new dimension of possibilities. Museums in particular are pushing the envelope with AR and showcasing the technology’s potential through creative implementation. They’re using AR for everything from wayfinding to bringing objects to life to developing entirely new digital artworks. When you layer contextual information on top of objects, products, or places, you end up with a seamless, magical experience, and the cultural sector is showing just how wide the possibilities are. Let’s take a look.

Journeys into the world of AR

Mobile, location-aware technologies, and machine vision: these are a few of the things I’m obsessed with. And then came AR. Last year, my team ramped up our exploration of AR with Apple’s ARKit and a close partnership with Pérez Art Museum Miami [which featured in Museums + Heritage Advisor’s feature Technology in Museums – introducing new ways to see the cultural world], generously supported by the Knight Foundation. Through our joint efforts, the first museum exhibition to use ARKit was born.

I’m incredibly excited about the potential that technology such as AR unleashes in the production of culture, but we’re fundamentally interested in how it can be used to elevate the existing visual culture around us. For this, the melding of AR and machine vision was a clear opportunity.

In the words of Benedict Evans of Andreessen Horowitz: “We’re going from computers with cameras that take photos, to computers with eyes that can see.” And I’m excited about a world where those eyes bring knowledge, stories, and meaning to the culture around you.

Bringing existing data to life

How can we utilise these tools to deliver content in a simple and compelling way? How can we leverage existing content, which is both more abundant and more scalable, rather than rely on the creation of new content? With these questions in mind, we wanted to focus on what we’ve been calling “minimum viable content”: the least amount of content required to provide a valuable visitor experience.

Museums are overflowing reservoirs of data and, today, are eager to open the floodgates so the world can tap in and build new avenues and layers to distribute this knowledge. We focused only on existing content, on the premise that AR could amplify basic content with no additional production time or cost. We wanted to show that this could be accessible to places of all shapes and sizes, from your local historical society to the largest art museums around the world.

A new shovel in the cultural sandbox (image recognition support)

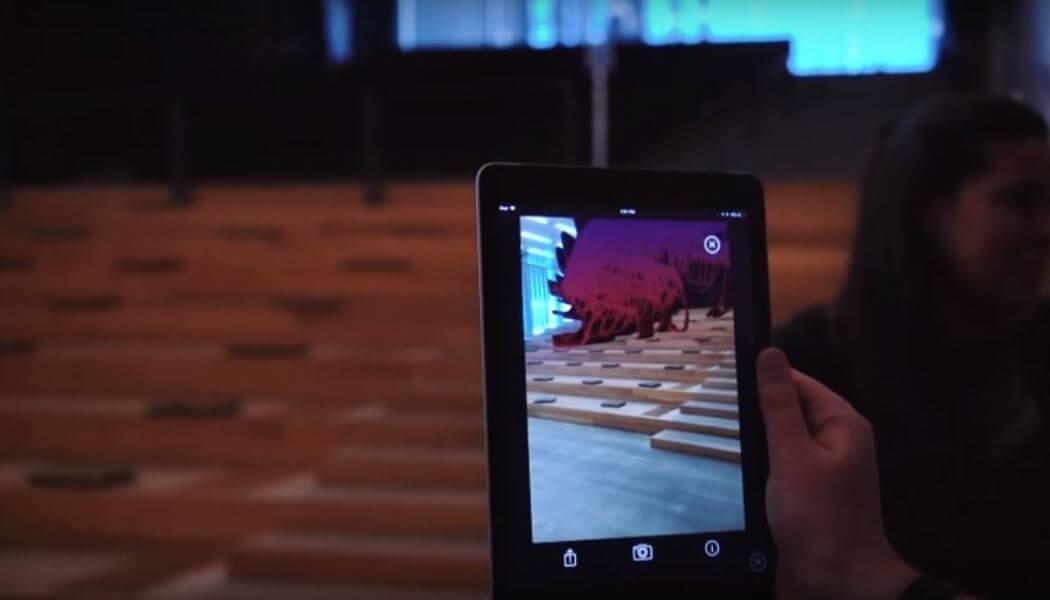

When version 1.5 of ARKit entered beta testing, mobile developers around the world jumped in to start experimenting and building prototypes. We were especially interested in the added image recognition support. We tested on screens and printed out dozens of well-known paintings, turning our office into a museum sandbox to test the technology in a real space with a variety of wall angles, surfaces, and lighting conditions.

After early testing yielded promising results, we took our prototype out into the wild (a local museum). Being able to test our prototype in a museum environment was an incredibly important part of the process, to ensure that everything worked smoothly in a real-world setting, including drastic variations in lighting, reflection, interference from nearby pieces, and other visitors in the space.

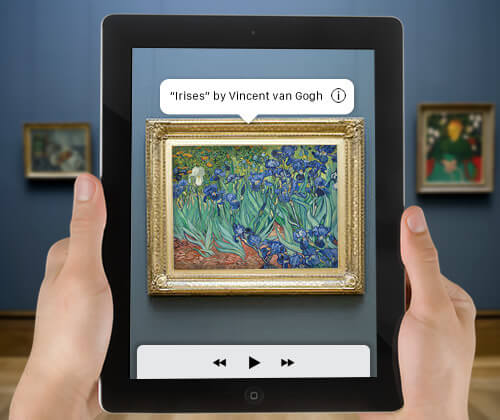

Once we nailed image recognition with the paintings, we took it to the next level. Imagine walking through a gallery chock-full of paintings. Not only could you pull up information about each work, but your phone could also recognise and label every painting instantly, right at your fingertips.

One of the unexpected aspects of our testing was the excitement from visitors who approached us, intrigued by what they were seeing on our screens. It was encouraging to see that people from different walks of life found this demonstration of AR helpful and inspiring.

Removing friction from experience

Walking through a museum, historic site, or any other cultural attraction surrounds you with countless opportunities to experience and learn from the things around you. Over time, museums have developed different ways to convey that information, from descriptive wall labels and audio guides to interactive screens and more. Much has changed over the past few years, including how the average consumer communicates, socialises, and sees the world around them. Nobody needs to tell you: the future and the now are mobile. Yet even as new technologies raise our expectations of ease and convenience, there are still some itches we haven’t been able to scratch.

“Last month I went to the recently opened San Francisco Museum of Modern Art and wanted to know everything about the art and various installations, beyond what was posted on the walls. I felt as if I should be able to lift my phone and get more details on the process of the creation of the artwork, rather than having to type a search term into my browser.”

Spot on. Given the advancements in mobile experience and the ever-increasing consumer expectations, why should this friction exist?

What we created and what we saw proved a new path forward for simple, seamless, and solid experiences. For AR paired with machine vision, the value proposition for museum-goers is clear to see: museums and spatial computing go together like peanut butter and jelly.

Museums are “experience factories” and also some of the most interesting sandboxes for UX experimentation. Over the years, tech giants including Google, Apple, Microsoft, and HTC have all leveraged museums in some capacity as a testing ground for new experiential technologies. With AR, we’ll see even more of this as brands eager to enter the new arena also benefit from the public’s positive attitudes towards these trusted and prestigious institutions.

Why now?

AR and image recognition technologies have been around for years, mostly in separate forms, and we’ve been experimenting with both since our inception. So, what’s special about where things are now?

The recent investment in AR by major tech platforms has yielded a never-before-seen level of quality, stability, and, ultimately, user experience. Recent developments around Apple’s ARKit and Google’s ARCore have made it faster and easier for app developers to roll out new experiences, including on-device image recognition, without a costly licence or a heavy third-party SDK. Previously, going the free, open-source route meant burning time and resources on libraries like OpenCV or on pHash-style perceptual hashing methods. The introduction of this support in ARKit 1.5 was a killer add-on.
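To give a feel for the hand-rolled route mentioned above, here is a minimal sketch of perceptual-hash matching in plain Python. It uses an average hash, a simpler relative of pHash, and it is purely illustrative: it is not code from our prototype and not how ARKit recognises images. The function names, the catalogue structure, and the `max_distance` threshold are all assumptions made for the example.

```python
from typing import Dict, List, Optional

Image = List[List[int]]  # grayscale pixels (0-255), one inner list per row


def shrink(image: Image, size: int = 8) -> List[List[float]]:
    """Downsample to size x size by averaging rectangular blocks of pixels."""
    rows, cols = len(image), len(image[0])
    if rows < size or cols < size:
        raise ValueError("image must be at least size x size pixels")
    small = []
    for r in range(size):
        r0, r1 = r * rows // size, (r + 1) * rows // size
        row = []
        for c in range(size):
            c0, c1 = c * cols // size, (c + 1) * cols // size
            block = [image[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            row.append(sum(block) / len(block))
        small.append(row)
    return small


def average_hash(image: Image, size: int = 8) -> int:
    """Return a size*size-bit hash: one bit per cell, set if brighter than the mean."""
    flat = [v for row in shrink(image, size) for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def best_match(photo: Image, catalogue: Dict[str, int],
               max_distance: int = 10) -> Optional[str]:
    """Return the catalogue title whose hash is closest to the photo's,
    or None if nothing is within max_distance bits (hypothetical threshold)."""
    if not catalogue:
        return None
    photo_hash = average_hash(photo)
    title = min(catalogue, key=lambda t: hamming(photo_hash, catalogue[t]))
    return title if hamming(photo_hash, catalogue[title]) <= max_distance else None
```

In this sketch, a museum would hash each reference image once to build the catalogue, then hash every camera frame and look for the nearest entry. A hash like this tolerates small brightness changes but not glare, skewed angles, or partial occlusion, which is where the time and resources tend to go.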

Augmented reality holds an abundance of untapped potential for museums and attractions. It may be hard to imagine what comes next, but our bet is that augmented reality will become the digital magnifying glass for the physical world, and play a part in the death of the traditional guided tour, audio guide, and wall label.

Companies like Apple and Google are putting vast resources behind augmented reality and encouraging developers like us to experiment. While we’re seeing a significant amount of innovation with AR, it’s hard to tell how these behemoths will integrate the technology into our everyday tools beyond native cameras and maps, or how exactly it will change our lives. It’s easy to guess, though, that it will affect us in ways we can’t even begin to dream up.

‘Vision is the art of seeing what is invisible to others’

What we can infer is that today you are the cursor, AR is the magnifying glass, and the physical world is the canvas. Advancements in your phone’s camera will unlock a new browsing experience – AR, machine vision, and visual search will help annotate the world around you every step of the way. Inquiring minds will have the option to learn more and effortlessly dig deep. For the weary tourist, it will make consumption and exploration of new sites more digestible, in a language and interface that’s familiar to them. For the experienced traveller, AR will add a new layer of information within their line of vision for them to delve as deep as they please.

How we think about designing experiences, and about displaying and consuming information, will change significantly. As the fluidity increases between the real world that we see with our eyes and the digital world on our screens, AR will shift from mere novelty to absolute necessity.

A version of this blog first appeared on VentureBeat.